AI Report #4: AutoGPT and Open-source Lags Behind, Part 2

Hello and welcome to the fourth episode of the AI Report. We aim to keep you informed about the world of AI, from research to products to everyday life, and to shed light on the latest trends. Please subscribe for actionable insights and share this newsletter on social media.

Trends in AI

Open-source lags behind - Part 2

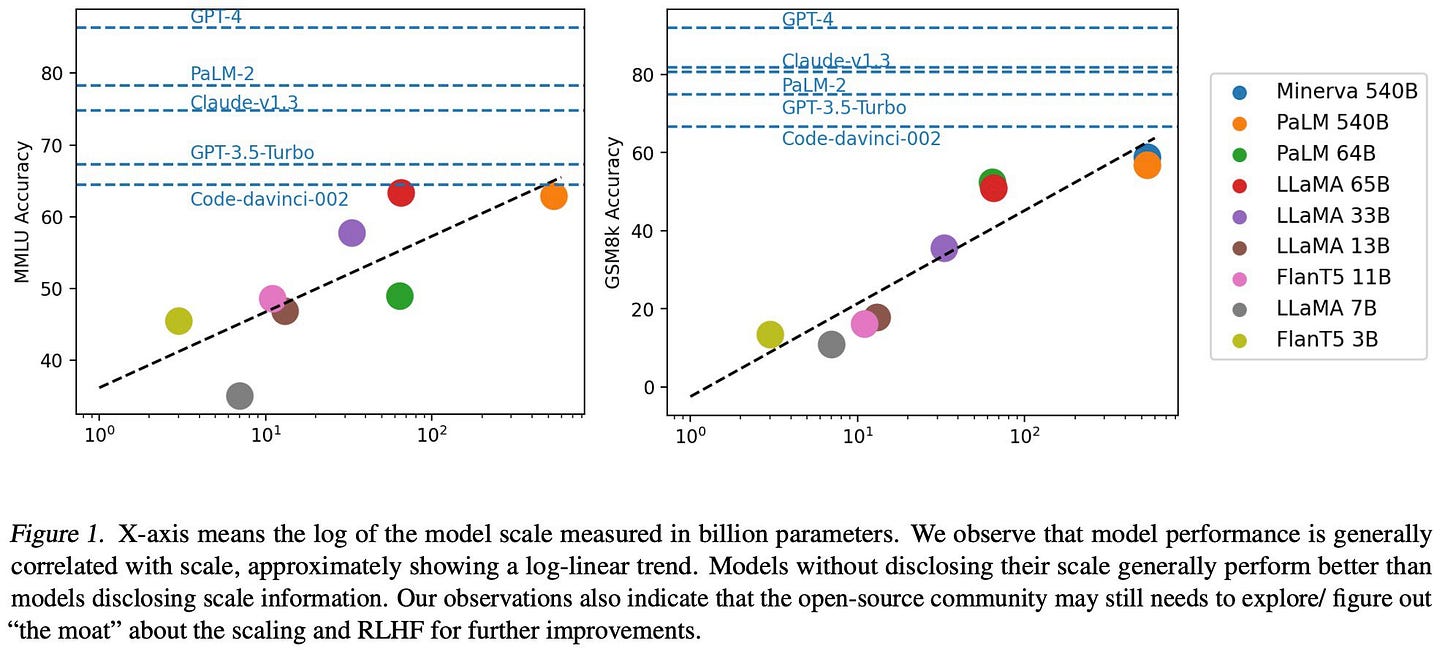

We previously saw that open-source is lagging behind: models such as Alpaca were mostly mimicking ChatGPT, so we were deceived by a mirage. A different paper shows the gap a bit more clearly on two datasets (MMLU and GSM8K). The paper also posits that the next step the open-source community should consider is getting better at RLHF.

So the open-source community has at least the following two directions to pursue:

Train a better base LLM

Get better at RLHF

Note that these are based on pre-prints, so let’s use these insights to improve our understanding of the direction while keeping an open mind.

Did AutoGPT succeed or fail?

At the time of writing, AutoGPT has 136k stars on GitHub, while PyTorch has only 67k. A similar repository, BabyAGI, has racked up 14k stars as well. But nothing concrete has come out of either AutoGPT or BabyAGI yet.

So do we call the AutoGPT experiment a failure?

Unfortunately, a lot of AI influencers over-promised on the capabilities (“this is going to replace humans”), while the reality has been that getting these to do anything non-trivial has been, well, extremely non-trivial.

So perhaps we can discount the hype-driven number of stars and examine where these experiments stand.

We are starting to see more research in this area (see the Tool Makers paper below, for example), and we think a lot more of it is needed for AutoGPT and its variants to succeed. Make no mistake, these are great initiatives and worth more investment, not less. Let a thousand flowers bloom.

Our personal take is that it might be beneficial to build agents that excel at some really well-defined and small-scale tasks. Eventually it will be helpful to compose these together. We are eager to hear what others think!

Research in AI

📝 [Paper] Small Language Models Improve Giants by Rewriting Their Outputs

LLMs, while powerful, often don't do as well on difficult tasks as models specifically trained for those tasks. Additionally, their huge size and restricted access can make them hard to fine-tune for specific tasks. They also often need careful and time-consuming adjustment of their prompts. To solve these issues, the paper introduces a method that improves the results from the LLM without needing to adjust its internal workings. The method uses a smaller language model, called LMCor, that ranks, merges, and rewrites suggestions from the LLM to create a better final output. Experiments have shown that even a small LMCor can greatly enhance the LLM's performance on a variety of tasks. It also reduces the need for careful prompt adjustment and can be used with any LLM, improving its performance.
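The pipeline described above can be sketched roughly as follows. This is only an illustration of the data flow, not the paper's implementation: `fake_llm` and `consensus_corrector` are hypothetical stand-ins, and the toy corrector only ranks candidates by word overlap, whereas the corrector in the paper is a trained model that can also merge and rewrite them.

```python
from typing import Callable, List

def correct_with_small_model(
    source: str,
    sample_candidates: Callable[[str, int], List[str]],
    corrector: Callable[[str, List[str]], str],
    num_candidates: int = 3,
) -> str:
    """Get several candidates from a frozen LLM, then let a small model decide the output."""
    candidates = sample_candidates(source, num_candidates)
    return corrector(source, candidates)

def fake_llm(source: str, k: int) -> List[str]:
    """Hypothetical stand-in for sampling k outputs from a large, black-box LLM."""
    pool = [
        "Paris is the capital",
        "Paris is the capital of France",
        "Lyon is the capital of France",
    ]
    return pool[:k]

def consensus_corrector(source: str, candidates: List[str]) -> str:
    """Toy stand-in for a trained corrector: keep the candidate that shares
    the most words with the other candidates (ranking only, no rewriting)."""
    def overlap(i: int) -> int:
        words = set(candidates[i].split())
        return sum(
            len(words & set(other.split()))
            for j, other in enumerate(candidates)
            if j != i
        )
    best = max(range(len(candidates)), key=overlap)
    return candidates[best]

result = correct_with_small_model(
    "What is the capital of France?", fake_llm, consensus_corrector
)
print(result)
```

The point of the design is that the large model is never fine-tuned: only the small corrector needs task-specific training.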

📝 [Paper] Large Language Models as Tool Makers

This research presents a method in which LLMs create and use their own tools to solve problems more efficiently. A bigger model (e.g. GPT-4) makes the tools, and a smaller model (e.g. GPT-3.5) uses them. The system was tested and found to be nearly as effective as using the more powerful model for everything, but at a much lower serving cost.

This is a paper from Google DeepMind.
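The division of labor in the Tool Makers paper can be illustrated with a small sketch. Everything here is assumed for illustration: `tool_maker` stands in for the larger model and simply returns hard-coded Python source, `tool_user` stands in for the smaller model, and `ToolCache` is a hypothetical helper that makes sure each tool is built only once.

```python
from typing import Callable, Dict

def tool_maker(task_name: str) -> str:
    """Stand-in for the larger model: returns Python source for a reusable tool."""
    if task_name == "sort_words":
        return "def tool(text):\n    return ' '.join(sorted(text.split()))\n"
    raise ValueError(f"no tool known for task {task_name!r}")

class ToolCache:
    """Builds a tool on first use, then reuses it for later requests."""

    def __init__(self, maker: Callable[[str], str]) -> None:
        self._maker = maker
        self._tools: Dict[str, Callable[[str], str]] = {}
        self.maker_calls = 0  # how often the expensive model was needed

    def get(self, task_name: str) -> Callable[[str], str]:
        if task_name not in self._tools:
            self.maker_calls += 1
            namespace: dict = {}
            # Running model-written code is unsafe outside a sandbox;
            # exec is used here only to keep the sketch short.
            exec(self._maker(task_name), namespace)
            self._tools[task_name] = namespace["tool"]
        return self._tools[task_name]

def tool_user(cache: ToolCache, task_name: str, request: str) -> str:
    """Stand-in for the smaller model: it only has to call an existing tool."""
    return cache.get(task_name)(request)

cache = ToolCache(tool_maker)
first = tool_user(cache, "sort_words", "pear apple fig")
second = tool_user(cache, "sort_words", "b c a")
print(first, "|", second, "| maker calls:", cache.maker_calls)
```

Both requests are served by the same tool, so the expensive maker runs once while the cheap user handles every call. That reuse is where the lower serving cost comes from.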

📝 [Paper] Let’s Verify Step by Step

The authors compare two training methods: one that provides feedback only on the final result (outcome supervision), and another that provides feedback on each step of the reasoning (process supervision). Using a challenging math problem set for testing, they found that the step-by-step approach was significantly more effective. Their best model was able to solve 78% of the test problems using this method. The research also demonstrated that active learning, where the most informative examples are picked out for labeling, improved this step-by-step method even more. They're releasing their dataset of 800,000 step-level feedback examples for other researchers to use.

Paper from OpenAI. See announcement.
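To make the outcome-versus-process distinction concrete, here is a minimal sketch of how step-level scores could be used to choose among several candidate solutions. The probabilities are made up, and multiplying them together is an assumption made for this sketch (one simple way to turn per-step scores into a single score); `process_score` and `pick_best` are hypothetical names.

```python
from math import prod
from typing import List, Sequence

def process_score(step_probs: Sequence[float]) -> float:
    """Score a solution as the product of its per-step correctness probabilities."""
    return prod(step_probs)

def pick_best(solutions: List[Sequence[float]]) -> int:
    """Return the index of the candidate solution with the highest process score."""
    return max(range(len(solutions)), key=lambda i: process_score(solutions[i]))

# Each inner list holds made-up per-step probabilities for one candidate solution.
candidates = [
    [0.9, 0.9, 0.2],  # two confident steps, then one that is likely wrong
    [0.8, 0.8, 0.8],  # no single step looks suspicious
]
best_index = pick_best(candidates)
print(best_index, [round(process_score(c), 3) for c in candidates])
```

A single weak step drags the first solution's score down, which is the kind of signal an outcome-only label cannot give: it would only say whether the final answer was right.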

Please also follow us at @the_ai_report on Twitter. We have a favor to ask you: please take a minute to share this on social media!

Help us also understand what kind of content is helpful. Do you want to see more papers, or something else?